Requirements

To gather and refine the project's requirements, we liaised with our client, NTT Data, until we reached common ground and set realistic goals for the project. We held one in-person meeting; all other meetings were conducted virtually over Skype.

MoSCoW List of features

Must Have

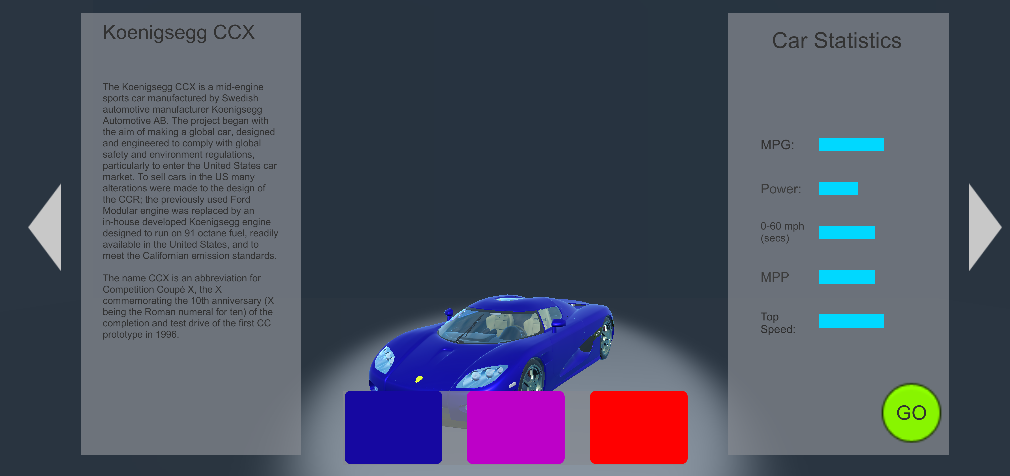

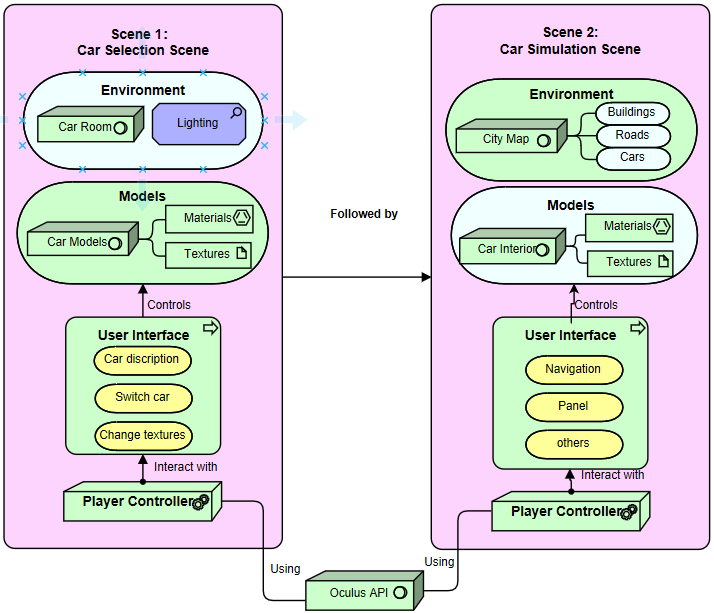

A user-friendly interface to select a car

- User-friendly elements include buttons for choosing the car's colour and arrows for navigating between car options

- Users must also be able to view information about a car: its specifications, description, and performance figures

A simulation of interacting with elements of the car

- Track the user's hands and give visual feedback (where applicable) when they interact with elements of the car (such as the steering wheel and other parts of the car visible to the user)

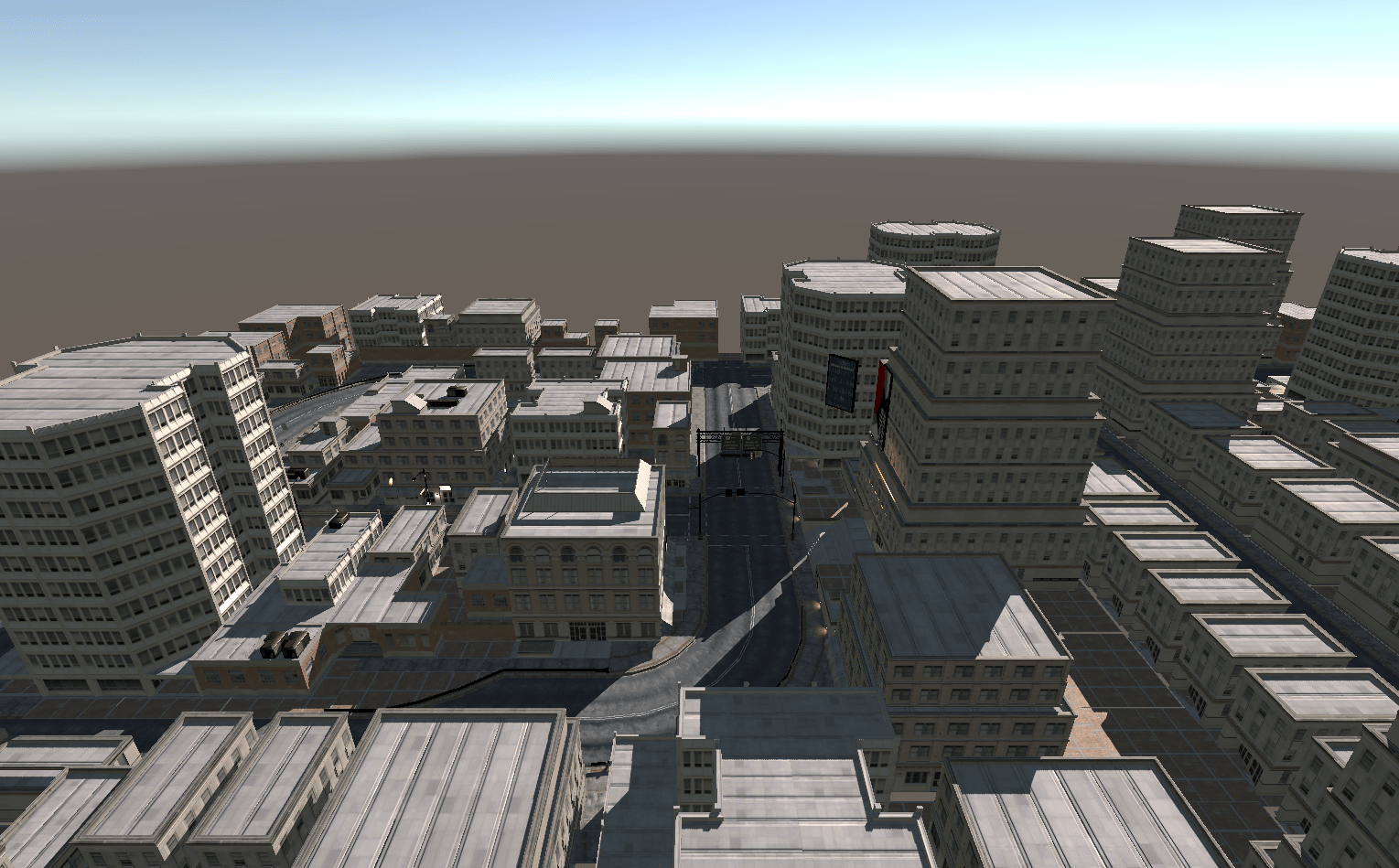

A feature-complete simulation of riding in a self-driving car

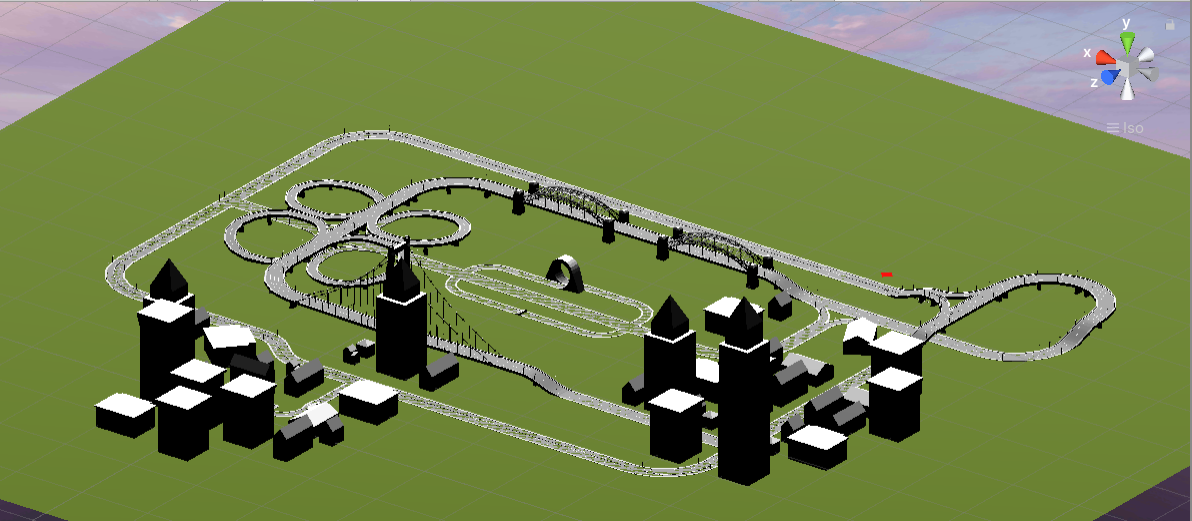

- Users must be able to see the car moving from a start point to a destination along a specific track

- The car must have the basic elements of a car's interior: a driver's seat, a front-passenger seat, and a Dashboard/instrument panel with at least a speedometer
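Moving the car "from a start point to a destination along a specific track" can be reduced to computing the car's position along a polyline of waypoints at a given time. The sketch below is illustrative only and rests on assumptions not stated in the requirements: the track is stored as hypothetical 2D waypoints in metres, and the car drives at a constant speed. A game engine would typically call something like this once per frame; a real implementation would also vary speed (braking at corners, stopping at junctions) and orient the car along the current segment.

```python
import math
from typing import List, Tuple

# Assumed representation: a track is an ordered list of (x, y) waypoints in metres.
Point = Tuple[float, float]


def position_along_track(waypoints: List[Point], speed_mps: float, elapsed_s: float) -> Point:
    """Position of a car that has driven `elapsed_s` seconds at constant `speed_mps`.

    Interpolates linearly between consecutive waypoints and stops at the last one.
    Assumes `waypoints` is non-empty.
    """
    remaining = speed_mps * elapsed_s  # distance (m) still to cover from the start
    for (x0, y0), (x1, y1) in zip(waypoints, waypoints[1:]):
        segment = math.hypot(x1 - x0, y1 - y0)
        if remaining <= segment:
            # The car is somewhere on this segment: interpolate between its endpoints.
            fraction = remaining / segment if segment > 0 else 0.0
            return (x0 + fraction * (x1 - x0), y0 + fraction * (y1 - y0))
        remaining -= segment  # skip past this whole segment
    return waypoints[-1]  # destination reached; the car stays there


if __name__ == "__main__":
    track = [(0.0, 0.0), (100.0, 0.0), (100.0, 50.0)]  # hypothetical L-shaped track
    for t in (0.0, 5.0, 12.0, 60.0):
        print(t, position_along_track(track, speed_mps=10.0, elapsed_s=t))
```

The same function could also drive a marker on a virtual map, since both only need the car's current position along the route.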

Should Have

A user-friendly interface to select a car

- Should allow users to select a car using Hand Tracking features such as gestures

- Users should be able to use gestures to switch between cars, view information about the cars, and select a car

An interface for customising the car

- A menu that lists all available cars, with additional options for customising the car's exterior (such as its colour and decorative elements like stickers)

A feature-complete simulation of riding in a self-driving car

- Users should be able to interact with the Dashboard/instrument panel (using Hand Tracking) and receive visual feedback

- Users should be able to look around freely (rotating and changing their view), just as they would in real life

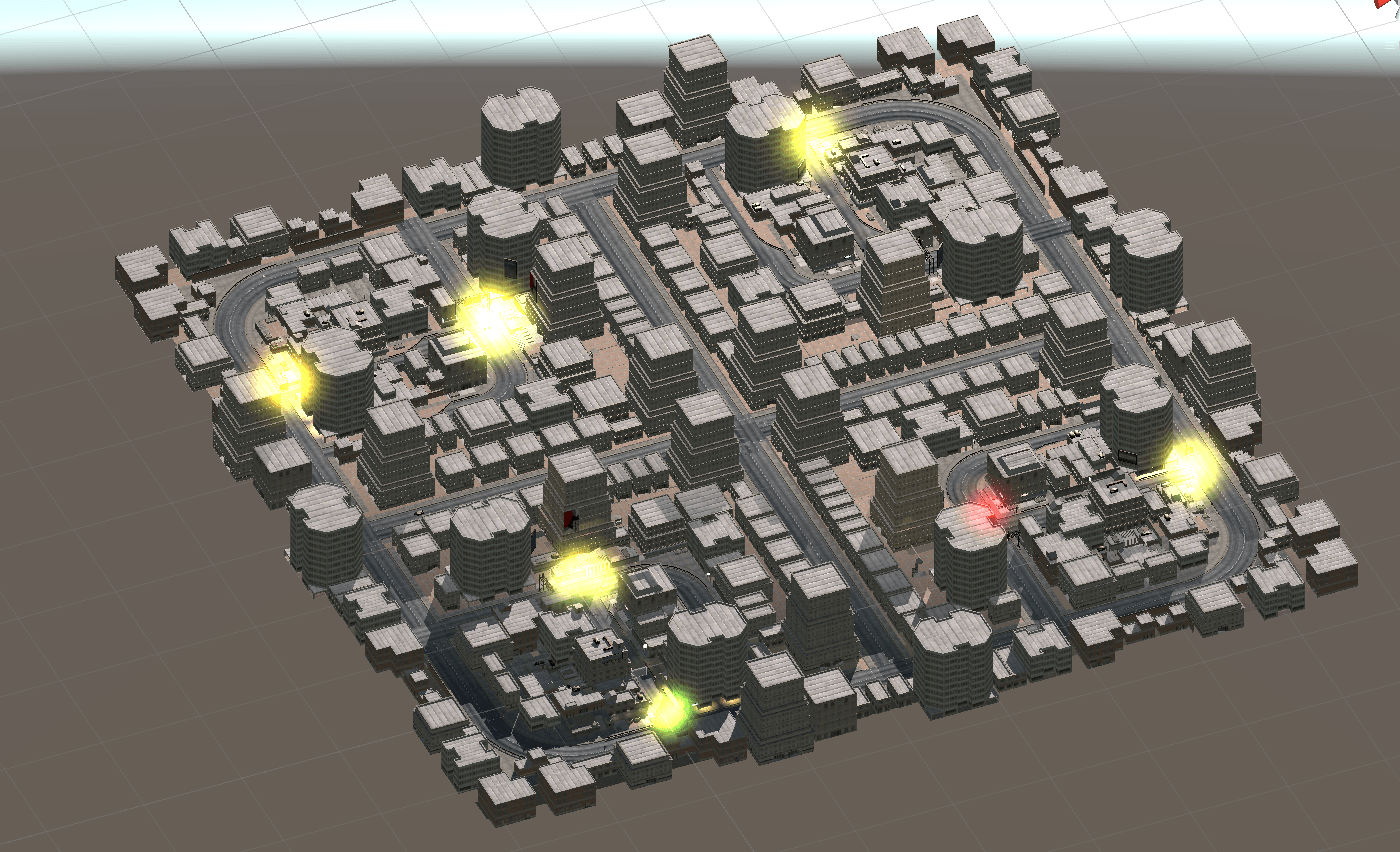

- The application should have a feature-complete environment for the simulation, similar to a city, with buildings, roads, highways, roundabouts, bridges, etc.

Could Have

An interface for customising the car

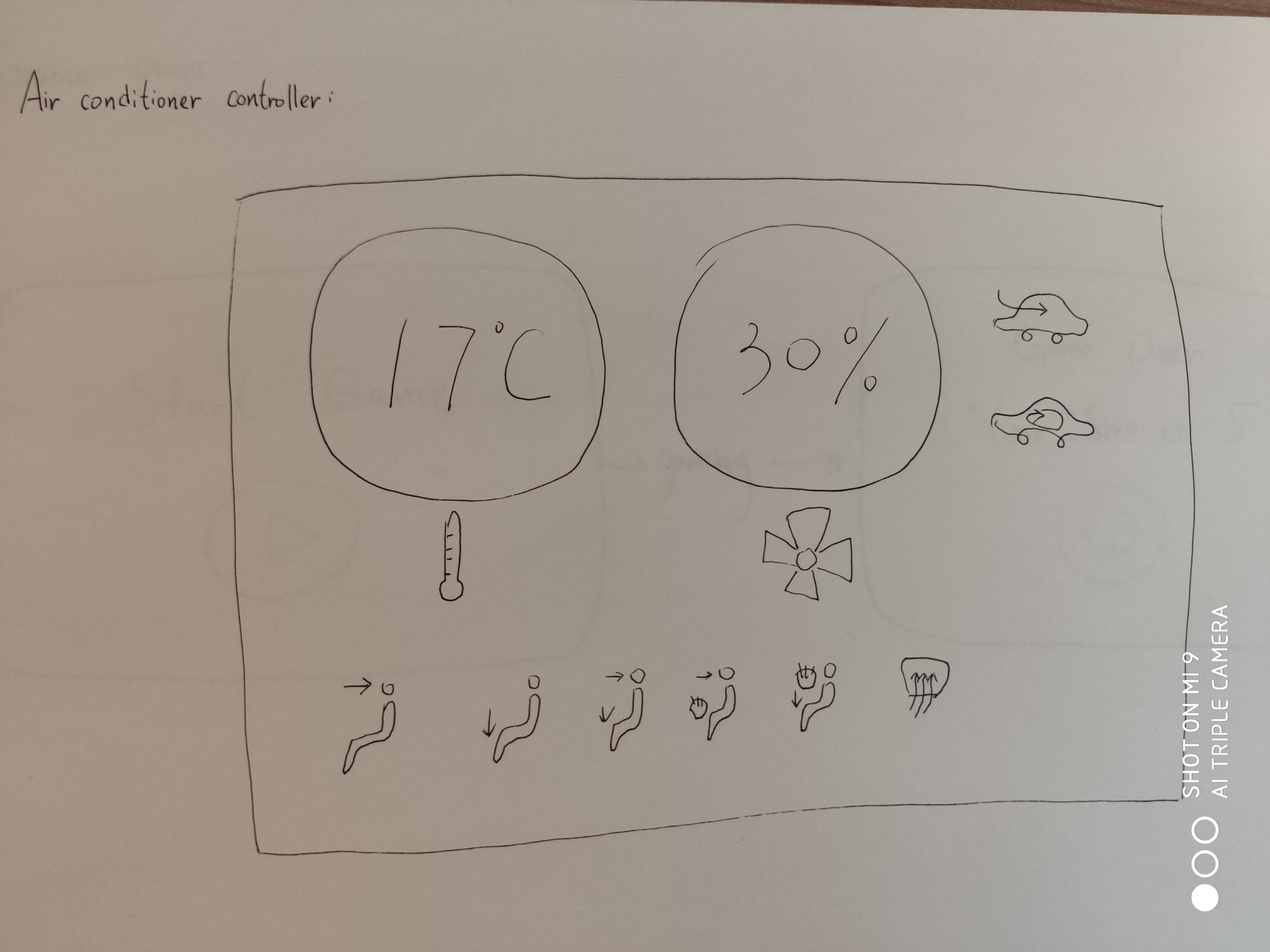

- Could have options to customise the car's interior, such as the colour of the seats and the information displayed on the Dashboard/Instrument panel (for example, adding fuel level and oil pressure alongside a broad array of gauges and controls), as well as climate-control options (such as regulating the cabin temperature and the air-conditioner fan speed)

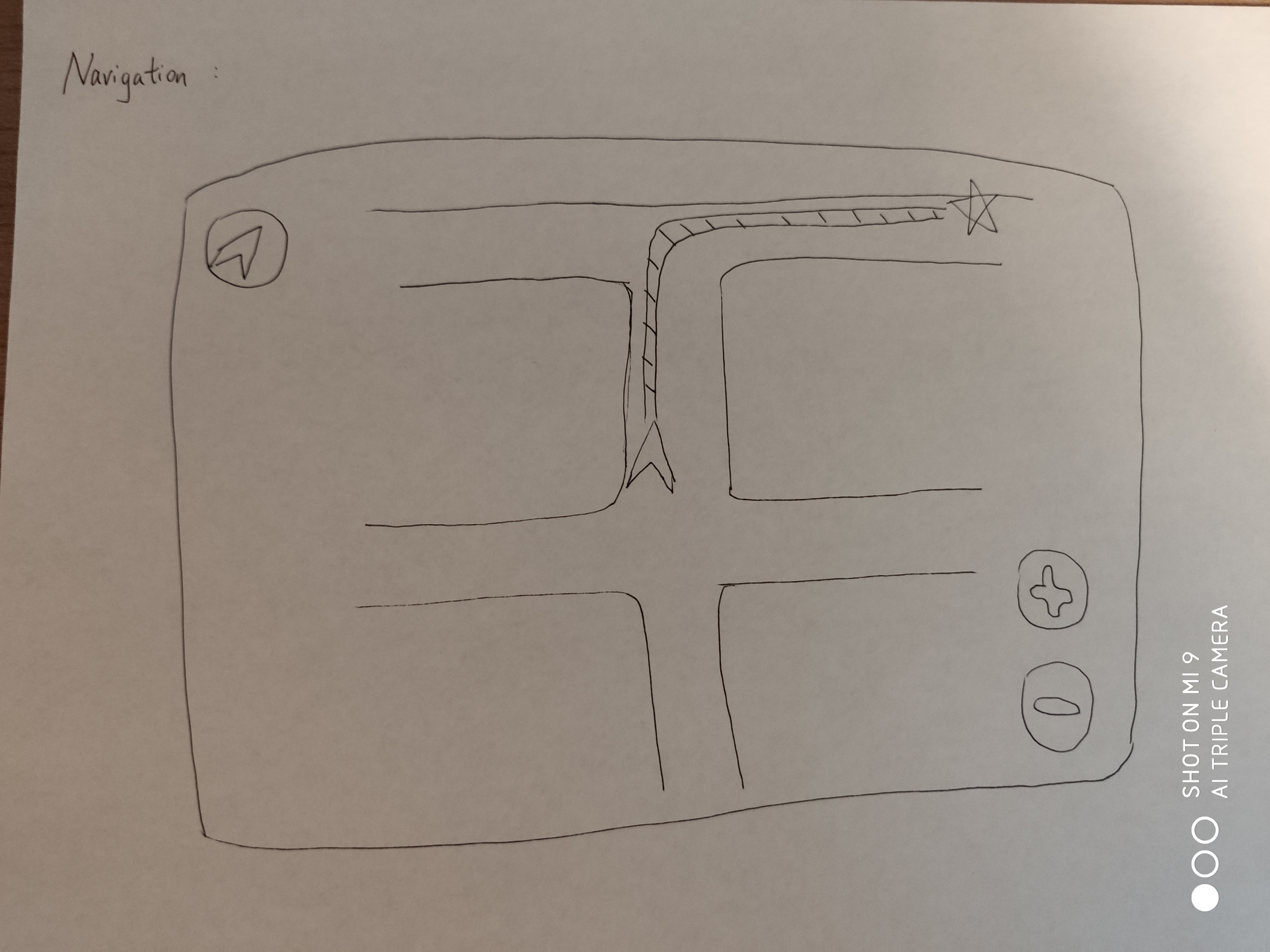

Options for customising the route that the car will take

- Could have a pathfinding algorithm that navigates the car from a starting point to a destination

- Could have options that allow the user to choose a starting point and a destination, along with alternate routes and the estimated travel time for each route
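The requirements do not name a specific pathfinding algorithm. As one possible approach, the sketch below models the city as a directed road graph (the node names, segment lengths and speed limits are hypothetical) and uses Dijkstra's algorithm with estimated travel time as the edge cost, so each route comes with an ETA. Alternate routes are produced by a simple edge-removal heuristic: each road segment on the fastest route is banned in turn and the search is rerun. This is deliberately simpler than a full k-shortest-paths method such as Yen's algorithm and may miss some alternatives.

```python
import heapq
from typing import Dict, List, Optional, Tuple

# Hypothetical road network: node -> list of (neighbour, length_m, speed_limit_mps).
RoadGraph = Dict[str, List[Tuple[str, float, float]]]


def dijkstra(graph: RoadGraph, start: str, goal: str,
             banned: frozenset = frozenset()) -> Optional[Tuple[float, List[str]]]:
    """Fastest route by travel time, ignoring any (u, v) road segment in `banned`.

    Returns (total_seconds, [start, ..., goal]), or None if goal is unreachable.
    """
    queue = [(0.0, start, [start])]
    best: Dict[str, float] = {start: 0.0}
    while queue:
        elapsed, node, path = heapq.heappop(queue)
        if node == goal:
            return elapsed, path
        if elapsed > best.get(node, float("inf")):
            continue  # stale entry: a faster way to this node was already found
        for nxt, length_m, speed_mps in graph.get(node, []):
            if (node, nxt) in banned:
                continue
            t = elapsed + length_m / speed_mps  # ETA assumes driving at the speed limit
            if t < best.get(nxt, float("inf")):
                best[nxt] = t
                heapq.heappush(queue, (t, nxt, path + [nxt]))
    return None


def route_options(graph: RoadGraph, start: str, goal: str,
                  k: int = 3) -> List[Tuple[float, List[str]]]:
    """Up to k distinct routes with ETAs, fastest first (edge-removal heuristic)."""
    fastest = dijkstra(graph, start, goal)
    if fastest is None:
        return []
    options = {tuple(fastest[1]): fastest[0]}
    route = fastest[1]
    for u, v in zip(route, route[1:]):
        alt = dijkstra(graph, start, goal, frozenset({(u, v)}))
        if alt is not None:
            options.setdefault(tuple(alt[1]), alt[0])
    ranked = sorted(options.items(), key=lambda item: item[1])
    return [(seconds, list(path)) for path, seconds in ranked[:k]]


city: RoadGraph = {
    "Home": [("A", 500.0, 10.0), ("B", 800.0, 20.0)],
    "A": [("B", 200.0, 10.0), ("Office", 700.0, 10.0)],
    "B": [("Office", 600.0, 20.0)],
}

if __name__ == "__main__":
    for seconds, path in route_options(city, "Home", "Office"):
        print(f"{' -> '.join(path)}: {seconds:.0f} s")
```

For this toy network the script prints three routes: `Home -> B -> Office` (70 s), `Home -> A -> B -> Office` (100 s) and `Home -> A -> Office` (120 s). Using travel time rather than distance as the edge cost is what lets the same search return both the route and its estimated duration.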

A feature-complete simulation of riding in a self-driving car

- A navigation system for the car - a virtual map with real-time updates of the car’s position

- The car could also have additional interior elements that may be found in future fully autonomous (Level 5) cars

An entertainment system for the car

- Could have a simple game and a music system

Won't Have

- A deep learning algorithm that trains the car to navigate road courses

Our Users

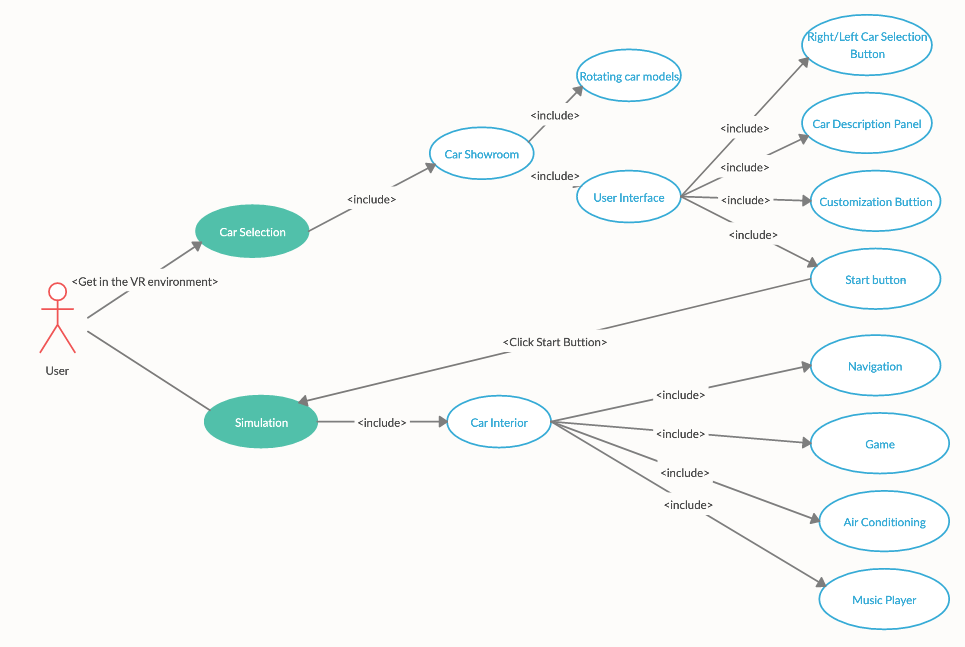

Use-Case Diagram